Nitro-Powered Glam and Styling Like a Magic Unicorn

Nitro Modules: The JSI-Powered Speed Machine

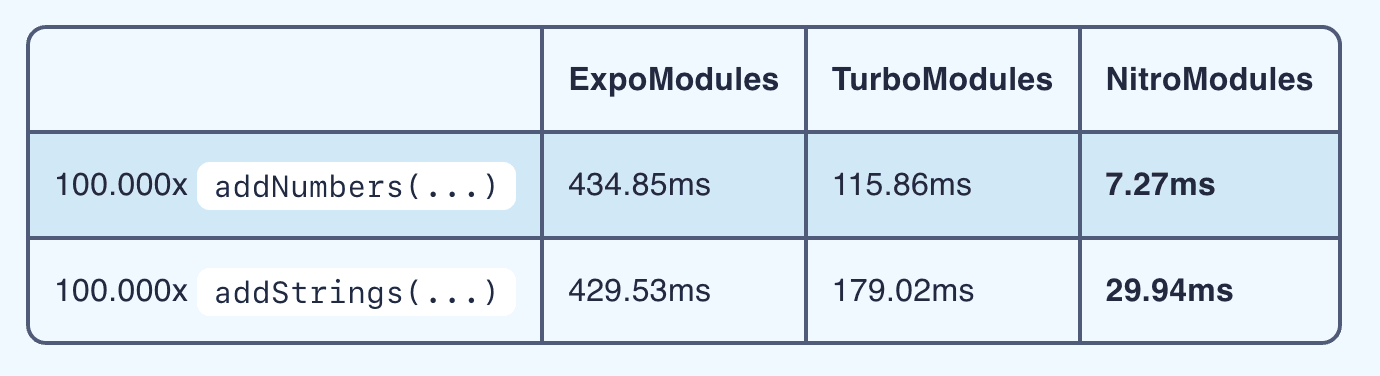

Nitro Modules have been making waves in the React Native community, and it’s not just because @mrousavy and the Margelo team are churning out libraries faster than a CI pipeline on a good day. For those who’ve been hiding under a rock, Nitro is a framework for building high-performance native modules in C++, Swift, or Kotlin, using a statically compiled JSI (JavaScript Interface) binding layer to connect directly with React Native’s JavaScript runtime. Compared to Turbo Modules, Nitro stands out with its Nitrogen code generator, which transforms TypeScript interfaces into type-safe native bindings. Nitro’s benchmarks claim it’s up to 15x faster than Turbo Modules for basic operations and 59x faster than Expo Modules.

This week, Nitro’s been flexing its muscles with three shiny library releases that have the community whispering “game-changer” between sips of overpriced oat milk lattes.

First, there’s react-native-nitro-image, developed by Marc Rousavy himself—a name that’s quickly becoming synonymous with “please make native modules less painful.” This new library processes images entirely in memory, bypassing file I/O for ultra-fast resizing, cropping, and manipulation. We predict it will integrate with VisionCamera, which will also adopt Nitro, making the entire imaging pipeline—from camera capture to display—fully native and blazingly fast.
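“In memory” is easiest to picture with a raw pixel buffer. The sketch below is not nitro-image’s API. It is a plain TypeScript illustration that crops and resizes an image without a single file read or write. It assumes an uncompressed RGBA buffer, and `RawImage`, `crop`, and `resizeNearest` are hypothetical helpers.

```typescript
// Sketch only: an uncompressed RGBA image held entirely in memory.
// RawImage, crop, and resizeNearest are hypothetical, not nitro-image's API.
interface RawImage {
  width: number;
  height: number;
  data: Uint8Array; // width * height * 4 bytes, row by row
}

// Copy a rectangular region into a new buffer; no files are read or written.
function crop(img: RawImage, x: number, y: number, w: number, h: number): RawImage {
  if (x < 0 || y < 0 || w <= 0 || h <= 0 || x + w > img.width || y + h > img.height) {
    throw new RangeError("crop rectangle lies outside the image");
  }
  const out = new Uint8Array(w * h * 4);
  for (let row = 0; row < h; row++) {
    // Each cropped row is one contiguous slice of a source row.
    const start = ((y + row) * img.width + x) * 4;
    out.set(img.data.subarray(start, start + w * 4), row * w * 4);
  }
  return { width: w, height: h, data: out };
}

// Nearest-neighbour resize: each output pixel copies the closest source pixel.
function resizeNearest(img: RawImage, newW: number, newH: number): RawImage {
  const out = new Uint8Array(newW * newH * 4);
  for (let y = 0; y < newH; y++) {
    const srcY = Math.floor((y * img.height) / newH);
    for (let x = 0; x < newW; x++) {
      const srcX = Math.floor((x * img.width) / newW);
      const src = (srcY * img.width + srcX) * 4;
      out.set(img.data.subarray(src, src + 4), (y * newW + x) * 4);
    }
  }
  return { width: newW, height: newH, data: out };
}
```

Both operations are plain byte copies between typed arrays. That is the kind of work that gets fast once no disk round trip sits in between.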

Next, react-native-video v7.0-alpha is more than a refactor—it’s a reinvention. The team at @WidlarzGroup rewrote the entire library on Nitro to support preloading, so video data can be decoded into memory before it’s needed. The view that displays video is now decoupled from the player that decodes it, opening up all kinds of use cases: think invisible players for thumbnail previews or pre-cached streams without any black frames.
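Here is a minimal sketch of what decoupling the player from the view buys you. Every name in it (`PreparedMedia`, `PreloadCache`, `preload`, `attach`) is hypothetical and not part of react-native-video’s API. Loading starts before any view exists. A view that mounts later reuses the work already in flight or finished instead of starting from scratch.

```typescript
// Hypothetical handle for media that has been fetched and decoded ahead of time.
interface PreparedMedia {
  source: string;
  ready: boolean;
}

type Loader = (source: string) => Promise<PreparedMedia>;

// Hypothetical cache: loading starts in preload(), independent of any view.
class PreloadCache {
  private readonly load: Loader;
  private readonly pending = new Map<string, Promise<PreparedMedia>>();

  constructor(load: Loader) {
    this.load = load;
  }

  // Kick off loading once per source; repeated calls share the same promise.
  preload(source: string): Promise<PreparedMedia> {
    let task = this.pending.get(source);
    if (!task) {
      task = this.load(source);
      this.pending.set(source, task);
    }
    return task;
  }

  // A view mounting later reuses whatever work is already in flight or done.
  attach(source: string): Promise<PreparedMedia> {
    return this.preload(source);
  }
}
```

In a real player, the loader would trigger native fetching and decoding. Here it is just an async function you pass in.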

Finally, react-native-healthkit@9.0.0 by @kingstinct quietly made a big move: it’s now a Nitro Module too. This shift reduces native bridge overhead, which matters more than you’d think when querying HealthKit data across many categories. The new APIs are leaner, cleaner, and fully typed end-to-end. If you’ve ever tried wrangling HealthKit manually—wading through Objective-C, Swift interop, or oddly formatted timestamps—you’ll understand what a win this is.

Unistyles 3.0: Styling That Skips Re-Renders

Unistyles 3.0 has landed with a stable release, and it’s like the React Native styling library got a PhD in efficiency.

Unistyles makes it easy to handle themes and screen sizes in your app. You can define light and dark versions of your design, and breakpoints take care of responsive layouts. Set rules like “use bigger text on larger screens” or “add more padding on tablets,” and Unistyles will switch values based on the screen width.
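Picking a breakpoint boils down to a simple lookup. This sketch illustrates the idea and is not Unistyles’ implementation. It assumes a hypothetical breakpoint table and a mobile-first fallback, where a value missing for the current breakpoint falls back to the nearest smaller one.

```typescript
// Hypothetical breakpoint table: name -> minimum screen width (in dp).
const breakpoints = { xs: 0, sm: 576, md: 768, lg: 992, xl: 1200 } as const;
type Breakpoint = keyof typeof breakpoints;

// Breakpoint names ordered from the smallest minimum width to the largest.
const ordered = (Object.keys(breakpoints) as Breakpoint[]).sort(
  (a, b) => breakpoints[a] - breakpoints[b],
);

// The active breakpoint is the largest one whose minimum width fits the screen.
function activeBreakpoint(width: number): Breakpoint {
  let current: Breakpoint = ordered[0];
  for (const name of ordered) {
    if (width >= breakpoints[name]) current = name;
  }
  return current;
}

// Mobile-first lookup: use the active breakpoint's value, else the nearest smaller one.
function resolve<T>(values: Partial<Record<Breakpoint, T>>, width: number): T | undefined {
  for (let i = ordered.indexOf(activeBreakpoint(width)); i >= 0; i--) {
    const value = values[ordered[i]];
    if (value !== undefined) return value;
  }
  return undefined;
}
```

With `{ xs: 8, md: 16 }`, a 600-wide screen (breakpoint `sm`) falls back to 8, and anything from 768 up gets 16.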

And if you’re building for web, Unistyles converts your styles into fast, web-friendly CSS under the hood. You don’t need to write different code for native and web—just write your styles once, and they work everywhere.
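To give a feel for what “converting styles into CSS” involves, here is a toy converter. CamelCase properties become kebab-case, and bare numbers get a `px` unit, except for a small, deliberately incomplete list of unitless properties. It is a sketch under those assumptions, not how Unistyles actually generates its CSS.

```typescript
// Toy converter: a React Native-style style object -> one CSS rule.
// Not Unistyles' implementation; a sketch to show the basic mapping.
type StyleObject = Record<string, string | number>;

// A small, incomplete set of properties whose numbers stay unitless in CSS.
const UNITLESS = new Set(["flex", "flexGrow", "flexShrink", "fontWeight", "opacity", "zIndex"]);

// backgroundColor -> background-color
function toKebabCase(prop: string): string {
  return prop.replace(/[A-Z]/g, (letter) => `-${letter.toLowerCase()}`);
}

function toCssRule(className: string, style: StyleObject): string {
  const declarations = Object.entries(style).map(([prop, value]) => {
    // Bare numbers are density-independent pixels in React Native, so emit px.
    const cssValue =
      typeof value === "number" && !UNITLESS.has(prop) ? `${value}px` : String(value);
    return `${toKebabCase(prop)}: ${cssValue};`;
  });
  return `.${className} { ${declarations.join(" ")} }`;
}
```

For example, `toCssRule("container", { backgroundColor: "#fff", padding: 16 })` returns `.container { background-color: #fff; padding: 16px; }`.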

```typescript
import { StyleSheet } from 'react-native-unistyles';

// Define a stylesheet with theme and breakpoint support.
// Assumes a theme with `colors` and `padding`, and breakpoints xs/sm/md,
// registered earlier with StyleSheet.configure.
const styles = StyleSheet.create((theme) => ({
  container: {
    flex: 1,
    justifyContent: 'center',
    alignItems: 'center',
    backgroundColor: theme.colors.background,
    // Breakpoint-specific padding: Unistyles picks the value for the current screen width
    padding: {
      xs: theme.padding * 0.5, // Smaller padding on extra-small screens
      sm: theme.padding * 1.5, // Larger padding on small screens
      md: theme.padding * 2, // Even larger padding on medium screens
    },
  },
  text: {
    color: theme.colors.text,
    fontSize: 18,
  },
}));
```

Crafted by @jpudysz and powered by Nitro Modules, this C++-driven powerhouse recomputes only the styles that change—say, when the theme or screen size shifts—without dragging your entire app through a re-render. It’s tightly woven into the New Architecture (Fabric) and React Native 0.78+, so if you’re still clinging to the old bridge, you’ll need to stick with Unistyles 2.0. Think of it as CSS on the web: update a style, and only the affected components refresh.
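The “recompute only what changed” idea can be sketched as dependency tracking. Each style records which runtime values it reads, and a change notifies only those styles. This is a conceptual TypeScript model, not Unistyles’ C++ internals, and `StyleRegistry`, `register`, and `notify` are hypothetical names.

```typescript
// Conceptual model only: hypothetical names, not Unistyles' C++ internals.
type Dependency = "theme" | "breakpoint";

type Style = Record<string, string | number>;

interface TrackedStyle {
  deps: Set<Dependency>; // runtime values this style reads
  compute: () => Style;
  current: Style;
}

class StyleRegistry {
  private readonly entries: TrackedStyle[] = [];

  register(deps: Dependency[], compute: () => Style): TrackedStyle {
    const entry: TrackedStyle = { deps: new Set(deps), compute, current: compute() };
    this.entries.push(entry);
    return entry;
  }

  // Recompute only the styles that depend on the changed value.
  // Returns how many styles were recomputed.
  notify(changed: Dependency): number {
    let recomputed = 0;
    for (const entry of this.entries) {
      if (entry.deps.has(changed)) {
        entry.current = entry.compute();
        recomputed++;
      }
    }
    return recomputed;
  }
}
```

Switching the theme here touches only theme-dependent styles. A style that reads only the breakpoint is left alone.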

Cyberpunk Chic and Offline Brains

React Native Skia 2.1: Lottie Gets a Glow-Up and 2D Scenes Get Perspective

The React Native Skia team, led by the ever-productive folks at Shopify and contributors like @wcandillon, has dropped React Native Skia 2.1. This release builds on Skia’s 2D graphics prowess with built-in support for Lottie animations via Skottie.

Unlike react-native-skottie, which runs in a native view outside the Skia canvas, Skia 2.1 introduces Skottie—a native Lottie engine baked into Skia itself. That means Lottie animations are now just another Skia drawing primitive: you can layer them with drawText, drawImage, or even custom shaders. The result? Seamless, performant visuals that ditch the old “two rendering engines on a unicycle” vibe and bring everything into a unified, GPU-accelerated pipeline.

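```tsx
import { Canvas, Group, Skottie, Skia } from "@shopify/react-native-skia";

// Load the Lottie JSON bundled with the app
const spaceshipAnimationJSON = require("./assets/spaceship.json");

// Skottie parses the JSON string into an animation Skia can draw
const animation = Skia.Skottie.Make(JSON.stringify(spaceshipAnimationJSON));

export const SkottieExample = () => {
  return (
    <Canvas style={{ width: 400, height: 300 }}>
      {/* Scale the whole animation down by half */}
      <Group transform={[{ scale: 0.5 }]}>
        {/* Render a single, fixed frame (frame 41) */}
        <Skottie animation={animation} frame={41} />
      </Group>
    </Canvas>
  );
};
```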

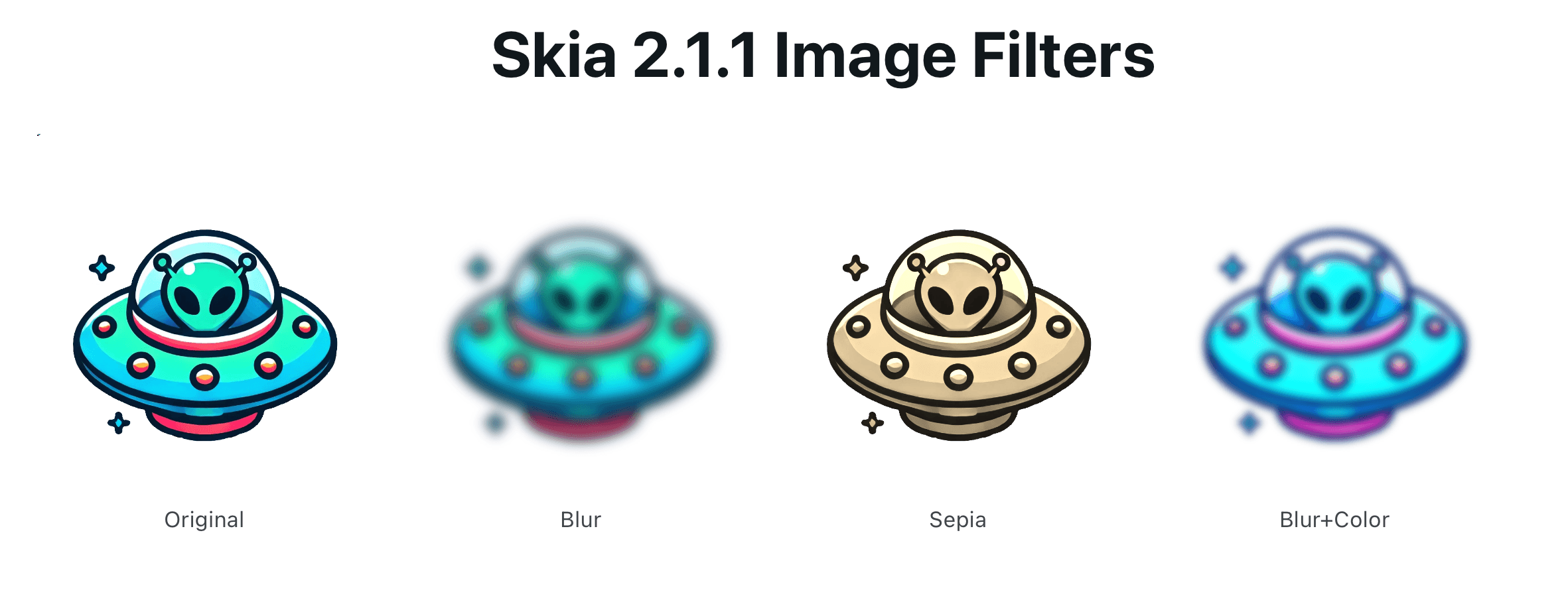
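In the Skottie example, `frame={41}` pins the animation to a single still. To animate it, you drive `frame` from a clock, and the arithmetic is just elapsed time times frame rate, wrapped around the total frame count. This sketch assumes you already know the animation’s frame rate and duration. It leaves out the clock wiring (for example, through Reanimated), and `frameAt` is a hypothetical helper.

```typescript
// Map elapsed time to a Lottie frame index, looping once the last frame is reached.
// fps and durationSeconds are assumed to come from your animation's metadata.
function frameAt(elapsedMs: number, fps: number, durationSeconds: number): number {
  const totalFrames = Math.round(fps * durationSeconds);
  if (totalFrames <= 0) return 0;
  const frame = Math.floor((elapsedMs / 1000) * fps);
  return frame % totalFrames;
}
```

At 30 fps for a 2-second animation, 2.5 seconds in lands on frame 15: 75 frames have elapsed, and 75 modulo 60 is 15.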

Skia 2.1 also introduces image filters and a perspective camera API for 2D scenes—features that unlock a whole new level of visual depth and realism. Image filters let you apply effects like blurs, color shifts, drop shadows, and more directly to any drawn element on the canvas. These filters are GPU-accelerated and composable, meaning you can stack them or animate them without breaking a sweat.
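“Composable” simply means each filter’s output feeds the next. The toy below runs on a single row of grayscale pixels on the CPU, whereas Skia runs real filters on the GPU. `Filter`, `boxBlur3`, `brighten`, and `compose` are hypothetical names, not Skia’s API.

```typescript
// CPU toy: one row of grayscale pixels (0-255). Skia's real filters run on the GPU.
// Filter, boxBlur3, brighten, and compose are hypothetical names, not Skia's API.
type Pixels = number[];
type Filter = (input: Pixels) => Pixels;

// 3-tap box blur; edge pixels reuse themselves as the missing neighbour.
const boxBlur3: Filter = (px) =>
  px.map((value, i) => {
    const left = px[Math.max(0, i - 1)];
    const right = px[Math.min(px.length - 1, i + 1)];
    return Math.round((left + value + right) / 3);
  });

// Add a constant to every pixel, clamped to the valid range.
const brighten = (amount: number): Filter => (px) =>
  px.map((value) => Math.min(255, Math.max(0, value + amount)));

// Stack filters: each one receives the previous one's output.
const compose = (...filters: Filter[]): Filter => (px) =>
  filters.reduce((acc, filter) => filter(acc), px);
```

Order matters once clamping is involved. Brightening `[250, 0, 250]` before blurring gives 173 everywhere, while blurring first gives 177.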

The new 2D camera brings a pseudo-3D perspective transformation, letting you tilt, rotate, or scale layers as if you’re viewing them from different angles. The core idea is to simulate 3D depth without leaving the 2D rendering context, giving developers more cinematic tools for transitions, parallax effects, or UI flourishes.
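The math behind this kind of pseudo-3D tilt is compact. You rotate a point of the flat layer around an axis, then divide by its distance from a virtual camera so that farther points shrink. This sketch uses a simple pinhole model with coordinates relative to the layer’s centre. `tiltY` and `cameraDistance` are hypothetical names, not Skia’s camera API.

```typescript
interface Point {
  x: number;
  y: number;
}

// Tilt a point of a flat layer around the vertical axis, then project it with a
// pinhole camera `cameraDistance` units in front of the layer.
// Coordinates are relative to the layer's centre; positive z points away from the camera.
function tiltY(p: Point, angleRad: number, cameraDistance: number): Point {
  const x3 = p.x * Math.cos(angleRad);
  const z3 = p.x * Math.sin(angleRad);
  if (cameraDistance + z3 <= 0) throw new RangeError("point is behind the camera");
  // Points pushed away from the camera shrink, points pulled toward it grow.
  const scale = cameraDistance / (cameraDistance + z3);
  return { x: x3 * scale, y: p.y * scale };
}
```

Tilting by 60° with the camera 500 units away, a right-edge corner at y = 50 shrinks to about 42.6 while the matching left-edge corner grows to about 60.5. That is the trapezoid you see in a card flip.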

Examples are still thin on the ground, which is understandable for something this fresh off the press. Even so, we couldn’t quite get the 2D camera working in react-native-skia, so if anyone out there has it running, slide into our DMs, will ya? 😉

React Native RAG: AI That Thinks Local, Not Cloud

The folks at @swmansion—led by @jakmroo—have dropped React Native RAG, a new library that gives your React Native app real, on-device intelligence powered by Retrieval-Augmented Generation (RAG). Unlike typical LLM setups that just “guess” based on what they were trained on, RAG lets your app search its own documents for the right info—then use that to answer the user’s question, all offline.

Built to integrate with React Native ExecuTorch, it adds a full RAG pipeline. Your app can take documents, split them into chunks, embed them as vectors, and store them locally for fast retrieval. When the user asks something, React Native RAG finds the most relevant pieces of your content and feeds them to the LLM, giving you answers based on your data, not just the model’s vague memory.
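Here is that pipeline reduced to a dependency-free sketch, so each step is visible. It is not React Native RAG’s API. A word-count vector stands in for a real embedding model, and `chunk`, `embed`, `cosine`, `InMemoryStore`, and `buildPrompt` are hypothetical helpers. The final prompt is what you would hand to an on-device LLM.

```typescript
// Toy retrieval pipeline: chunk -> embed -> store -> search -> prompt.
// Hypothetical helpers, not React Native RAG's API. A word-count vector
// stands in for a real embedding model so the sketch runs anywhere.
type Vector = Map<string, number>;

interface Chunk {
  text: string;
  vector: Vector;
}

// 1. Split a document into chunks of at most `maxWords` words.
function chunk(doc: string, maxWords: number): string[] {
  const words = doc.split(/\s+/).filter(Boolean);
  const chunks: string[] = [];
  for (let i = 0; i < words.length; i += maxWords) {
    chunks.push(words.slice(i, i + maxWords).join(" "));
  }
  return chunks;
}

// 2. "Embed" text as lowercase word counts.
function embed(text: string): Vector {
  const vector: Vector = new Map();
  for (const word of text.toLowerCase().match(/[a-z0-9]+/g) ?? []) {
    vector.set(word, (vector.get(word) ?? 0) + 1);
  }
  return vector;
}

// Cosine similarity between two sparse vectors (0 when either is empty).
function cosine(a: Vector, b: Vector): number {
  let dot = 0;
  for (const [word, count] of a) dot += count * (b.get(word) ?? 0);
  const norm = (v: Vector) => Math.sqrt([...v.values()].reduce((sum, x) => sum + x * x, 0));
  const denominator = norm(a) * norm(b);
  return denominator === 0 ? 0 : dot / denominator;
}

// 3. Keep chunks and their vectors in memory.
class InMemoryStore {
  readonly chunks: Chunk[] = [];

  add(doc: string, maxWords = 50): void {
    for (const text of chunk(doc, maxWords)) {
      this.chunks.push({ text, vector: embed(text) });
    }
  }

  // 4. Return the k chunks most similar to the question.
  search(question: string, k: number): Chunk[] {
    const q = embed(question);
    return [...this.chunks]
      .sort((x, y) => cosine(q, y.vector) - cosine(q, x.vector))
      .slice(0, k);
  }
}

// 5. Assemble the prompt an on-device LLM would receive.
function buildPrompt(question: string, context: Chunk[]): string {
  const numbered = context.map((c, i) => `[${i + 1}] ${c.text}`).join("\n");
  return `Answer using only this context:\n${numbered}\n\nQuestion: ${question}`;
}
```

Swapping `embed` for a learned model is what turns keyword overlap into semantic matching. The surrounding chunk, store, search, and prompt steps keep the same shape.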

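```tsx
import { useRAG, MemoryVectorStore } from 'react-native-rag';
import { ExecuTorchLLM, ExecuTorchEmbeddings } from '@react-native-rag/executorch';

// `llm` and `embeddings` stand for ExecuTorch-backed instances created with
// `new ExecuTorchLLM(...)` and `new ExecuTorchEmbeddings(...)`; their model
// and tokenizer options are omitted here.
declare const llm: ExecuTorchLLM;
declare const embeddings: ExecuTorchEmbeddings;

// Create the vector store once, outside the component, so it isn't rebuilt on every render
const vectorStore = new MemoryVectorStore({ embeddings });

const App = () => {
  const { isReady, generate } = useRAG({ llm, vectorStore });
  // ... your component logic
};
```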

That means your app can handle questions about things like your help docs, onboarding content, support guides, or personalised info—even without an internet connection. All while keeping user data private and infrastructure costs at zero.

It’s modular, lightweight (under 150kB), and production-ready. Whether you’re building a support bot, custom Q&A, or offline knowledge base, React Native RAG makes it feel like your app finally knows what it’s talking about.

Before You Go…

React Native Legal: Covering Your Backside on Licenses

If you’re like most React Native developers, you’ve probably never built a license attribution screen, even though your app is essentially a stack of open-source dependencies.

Here’s the deal: Section 5 (Legal) of Apple’s App Store Review Guidelines requires apps to comply with all legal requirements in every location where they’re available, and that includes intellectual property law (Section 5.2). Open-source licenses like MIT and Apache 2.0 typically require you to reproduce their copyright and license notices when you ship the code. Skipping this can breach those licenses, risking legal headaches or, worst case, app rejection.

Enter React Native Legal from @callstackio, a zero-config tool that scans your JavaScript and native dependencies and generates a native-style license screen for iOS, Android, tvOS, and Android TV. It’s a no-brainer for keeping your app compliant without manually digging through node_modules like a digital archaeologist.

The CLI works for bare React Native apps:

```sh
npx react-native legal-generate
```

Or, for managed workflows, add the Expo config plugin:

```json
{
  "expo": {
    "plugins": ["react-native-legal"]
  }
}
```

Then use it in your code:

```tsx
import { Button } from 'react-native';
import { ReactNativeLegal } from 'react-native-legal';

// Opens the native license list screen, titled "Open Source"
const LicenseButton = () => (
  <Button
    title="Licenses"
    onPress={() => ReactNativeLegal.launchLicenseListScreen('Open Source')}
  />
);
```

Got a custom UI in mind? The @callstack/licenses API outputs license data as JSON, Markdown, or text. It’s package-manager agnostic (npm, Yarn, Bun) and perfect for enterprise apps or monorepos where compliance isn’t optional. For those who’ve been winging it, this tool is your insurance policy against the day Apple or a litigious library author decides to care.
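If you go the custom-UI route, rendering the data is the easy part. This sketch groups entries by license identifier into Markdown. It assumes a hypothetical `LicenseEntry` shape, and the actual JSON emitted by @callstack/licenses may differ. A real notice should also carry each license’s full text, which is omitted here for brevity.

```typescript
// Hypothetical shape of one dependency's license entry (the real JSON may differ).
interface LicenseEntry {
  name: string;
  version: string;
  license: string; // SPDX identifier, e.g. "MIT"
  copyright?: string;
}

// Render entries as Markdown: one section per license, packages sorted by name.
// A real attribution screen should also include each license's full text.
function toMarkdown(entries: LicenseEntry[]): string {
  const groups = new Map<string, LicenseEntry[]>();
  for (const entry of entries) {
    const list = groups.get(entry.license) ?? [];
    list.push(entry);
    groups.set(entry.license, list);
  }
  const sections: string[] = [];
  for (const license of [...groups.keys()].sort()) {
    const items = groups
      .get(license)!
      .slice()
      .sort((a, b) => a.name.localeCompare(b.name))
      .map((e) => `- ${e.name}@${e.version}${e.copyright ? ` (${e.copyright})` : ""}`);
    sections.push(`## ${license}\n\n${items.join("\n")}`);
  }
  return sections.join("\n\n");
}
```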

Run it, ship it, and thank Callstack for making license compliance as painless as a clean build.