Issue #9

Okay, so React Native 0.79 isn’t going to make you feel like you’re starring in a Hollywood blockbuster. But it’s not here to be a snooze fest either.

First off, Metro got a serious upgrade. Cold starts are now up to 3x faster, so you won’t be watching your app load like you’re stuck in some never-ending buffering nightmare. The speedup comes from deferred hashing: instead of doing that slow work up front, Metro waits until it’s actually needed. As a result, both CI builds and your local dev workflow get faster, which is good for your productivity (and your sanity).
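To make “deferred hashing” concrete, here’s a toy TypeScript sketch of the general idea (lazy, memoized hashing). It is not Metro’s actual code: LazyFileEntry and fnv1a are hypothetical names, and FNV-1a simply stands in for whatever hash a real bundler uses. Registering a thousand files costs nothing. Only the files that are actually requested get read and hashed, and each only once.

```typescript
// Toy illustration of deferred (lazy) hashing. Not Metro's code:
// LazyFileEntry and fnv1a are hypothetical names for this sketch.

// FNV-1a: a small, non-cryptographic 32-bit hash, good enough for a demo.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0).toString(16).padStart(8, "0");
}

let hashComputations = 0; // counts how often the "slow work" actually ran

class LazyFileEntry {
  readonly path: string;
  private readonly readContents: () => string;
  private cachedHash: string | undefined = undefined;

  constructor(path: string, readContents: () => string) {
    this.path = path;
    this.readContents = readContents;
  }

  // Computed on first access, then memoized. Files nobody asks about
  // never pay for reading or hashing their contents.
  get hash(): string {
    if (this.cachedHash === undefined) {
      hashComputations++;
      this.cachedHash = fnv1a(this.readContents());
    }
    return this.cachedHash;
  }
}

// "Cold start": register 1,000 files without hashing any of them.
const files = Array.from(
  { length: 1000 },
  (_, i) => new LazyFileEntry(`src/file${i}.js`, () => `export const n = ${i};`),
);

// Only the files a build actually touches get hashed (file 0 is requested twice).
const touched = [...files.slice(0, 2), ...files.slice(0, 1)];
const hashes = touched.map((file) => file.hash);
console.log(hashes.length, hashComputations); // 3 2
```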

But before you get too excited about Metro’s speedup, let’s not forget about the Re.Pack vs Metro arms race. Re.Pack 5.0 (a Metro alternative) is out here throwing punches with its blazing fast bundling (we covered it in Issue #7), and it’s running on Rspack—a bundler written in Rust (Rust: the systems language that’ll make you feel like your computer actually works for you). So, while Metro’s cold start is looking good, the battle between these bundlers is far from over.

JavaScriptCore (JSC), the JavaScript engine React Native ran on before Hermes became the default, is officially moving to a community-maintained package, @react-native-community/javascriptcore. If you’re still holding onto JSC for dear life, consider this your warning: it’s time to let the community take the wheel. This change won’t affect Hermes users (but let’s be real… sadly, who has actually migrated to Hermes yet?). React Native core still ships JSC in 0.79, but it’s being phased out, so if you want to keep using it going forward, you’ll need to install the community package yourself.

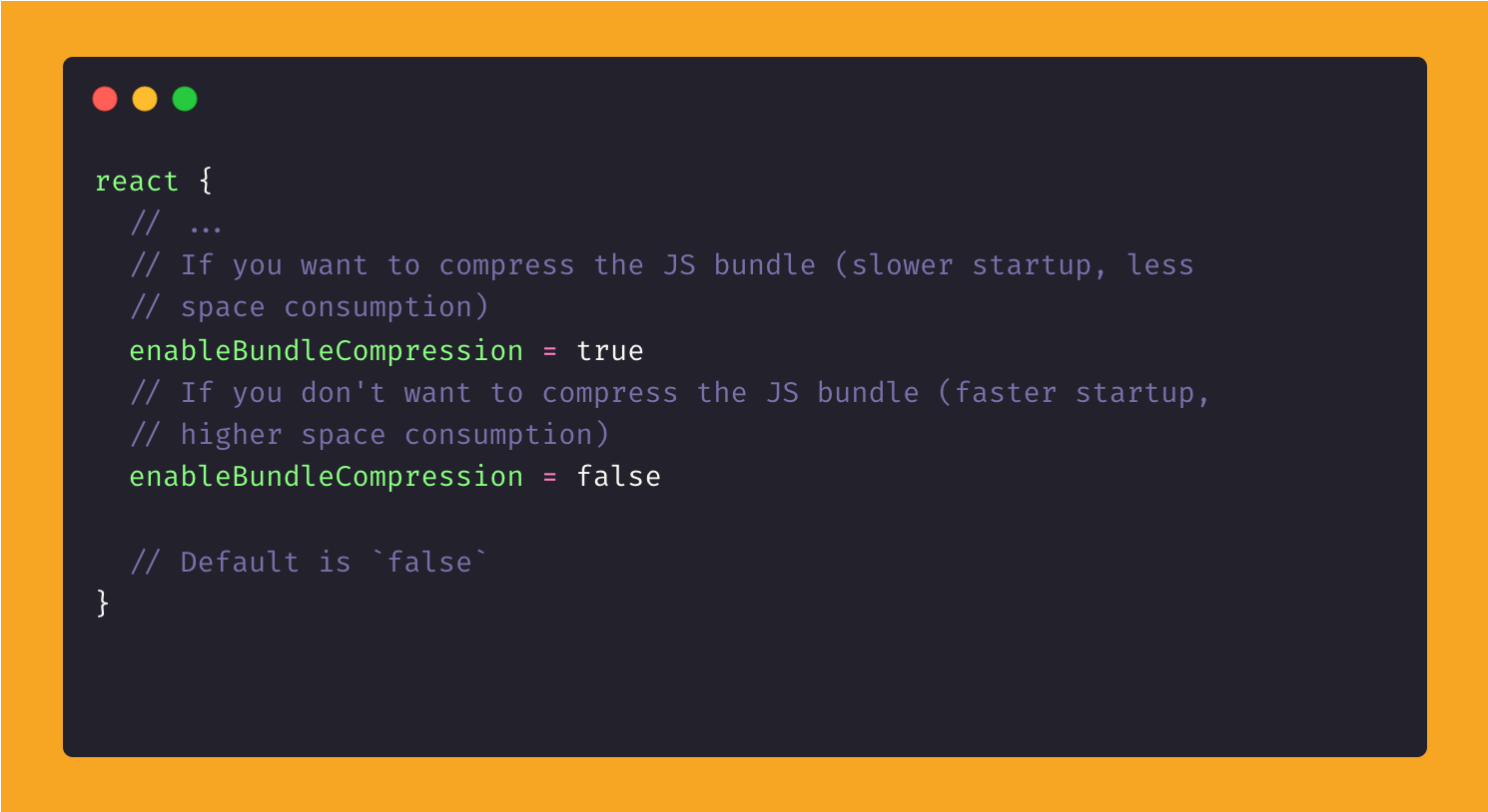

Now, let’s talk Android. App startup gets faster because the JavaScript bundle is now stored uncompressed in the APK, so Android no longer has to decompress it every time the app launches. The trade-off is a bigger APK, so if size is a concern, you can switch compression back on with the enableBundleCompression property in your app/build.gradle. You’ll get a speed boost (Discord saw a 12% faster startup; no, seriously), but if that extra space matters to you, you’re in control. (This change came from a PR by @mrousavy, which we covered in Issue #7, in case you missed it.)

And now, the moment we’ve all been waiting for: Remote JS Debugging (you know, pulling up a Chrome window and debugging your app from there) is dead. You’ve probably watched its slow demise since it was deprecated in 0.73, but now it’s officially gone. Time to say goodbye to the Chrome debugger (we’re not bitter. Nope). If you were still holding onto it, it’s time to move to React Native DevTools or other third-party debugging tools like Expo DevTools.

So, while you won’t be writing home about this release, it’s definitely one to welcome: fewer re-renders, more stability, faster builds, and smoother debugging. Thanks to Alan Hughes, @sg43245, @fabriziocucci and @cortinico.

The Expo SDK 53 Beta is here. The beta runs for two weeks, giving developers the chance to test the new release and ensure that their app configurations aren’t causing any regressions. While things are still in flux during this period, expect continuous fixes and improvements, some of which might even involve breaking changes.

First, Expo SDK 53 ships with React Native 0.79 and React 19, which brings Suspense for loading states along with the new use API for reading contexts and promises (we already covered both in Issue #6). Together, they make async operations easier to manage and component rendering more streamlined.

In SDK 53, React Native’s New Architecture is no longer opt-in for existing projects; it’s the default. So if you’re not ready to go all-in on the New Architecture, now’s the time to make a decision, because you’ll need to explicitly opt out. (You know, the things you can’t escape: death, taxes, and the React Native New Architecture.)
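If you do need to opt out, a minimal app.config.ts might look like the sketch below. It assumes the top-level newArchEnabled field in Expo’s app config (in app.json the same key sits under the expo object); the name and slug are placeholders, so double-check the SDK 53 docs before relying on it.

```typescript
// app.config.ts: a minimal sketch. "my-app" is a placeholder name and slug.
const config = {
  name: "my-app",
  slug: "my-app",
  // SDK 53 enables the New Architecture by default; false opts this app out.
  newArchEnabled: false,
};

export default config;
```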

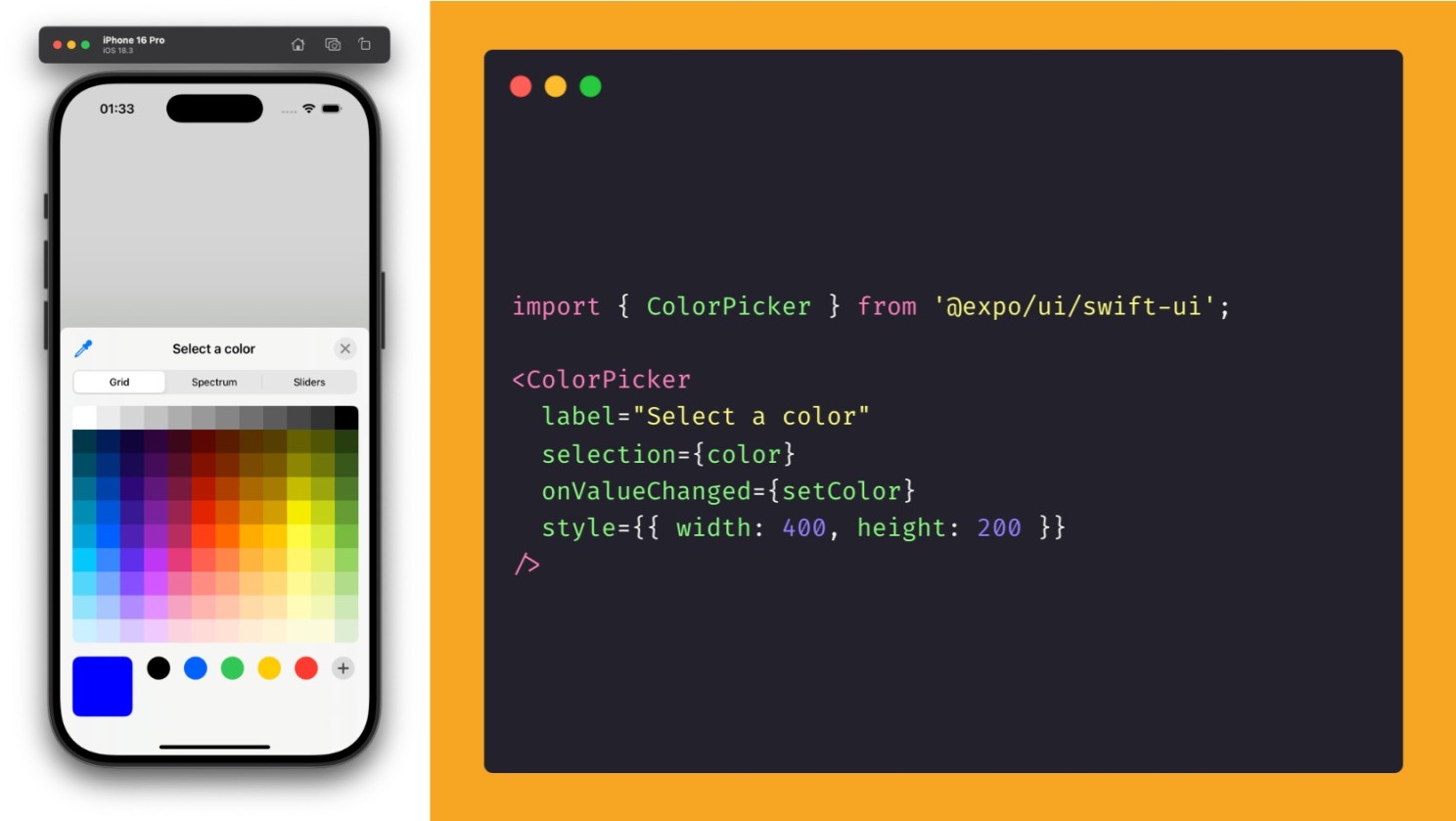

Next up, there’s a game-changer on the horizon for UI components 🥁

Expo UI, an experimental new package, allows you to integrate native UI components from Jetpack Compose and SwiftUI. While still a work-in-progress, Expo UI promises to make it easier to use native UI components, like toggles, sliders, context menus, and more, in your apps. This is a big step toward bridging the gap between native mobile development and React Native’s cross-platform approach. Keep in mind, this package is still in its infancy and might change rapidly, but if you’re feeling adventurous, it could bring some serious flexibility to your projects.

Who doesn’t love a little experimentation?

For those of you using react-native-maps, there’s a new option: expo-maps, currently in alpha. It wraps each platform’s standard maps API (Google Maps on Android, Apple Maps on iOS). Note, however, that expo-maps doesn’t support Google Maps on iOS, so if you need the same map provider and a consistent look on both Android and iOS, it won’t get you there; it’s a better fit for projects that are happy to use each platform’s native maps.

There’s also some cool stuff around build caching. SDK 53 introduces experimental support for caching local builds. This means that on subsequent runs, you and your team will be able to download previously built versions of your app. This feature is tied to EAS (Expo Application Services). The result?

You won’t have to wait around for your app to recompile every time you make a minor change. It’s a small time-saving win, but one that stacks up. On a cache miss, it falls back to compiling locally as usual, then uploads the archive for future runs.
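That hit-or-miss flow boils down to a few lines. This is a hypothetical in-memory sketch, not the EAS API: BuildCache, InMemoryCache, compileLocally and getOrBuild are made-up names, and we’re assuming the cache key is a fingerprint of the project’s native inputs.

```typescript
// Hypothetical sketch of "download if cached, else build and upload".
// None of these names are EAS or Expo APIs.

interface BuildCache {
  download(fingerprint: string): string | undefined;
  upload(fingerprint: string, artifact: string): void;
}

class InMemoryCache implements BuildCache {
  private store = new Map<string, string>();

  download(fingerprint: string): string | undefined {
    return this.store.get(fingerprint);
  }

  upload(fingerprint: string, artifact: string): void {
    this.store.set(fingerprint, artifact);
  }
}

let localCompiles = 0;

// Stands in for a slow native build.
function compileLocally(fingerprint: string): string {
  localCompiles++;
  return `app-${fingerprint}.apk`;
}

function getOrBuild(cache: BuildCache, fingerprint: string): string {
  const cached = cache.download(fingerprint);
  if (cached !== undefined) return cached; // cache hit: skip the native build
  const artifact = compileLocally(fingerprint); // cache miss: build as usual...
  cache.upload(fingerprint, artifact); // ...then share it for future runs
  return artifact;
}

const cache = new InMemoryCache();
getOrBuild(cache, "abc123"); // miss: compiles and uploads
getOrBuild(cache, "abc123"); // hit: reuses the uploaded artifact
console.log(localCompiles); // 1
```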

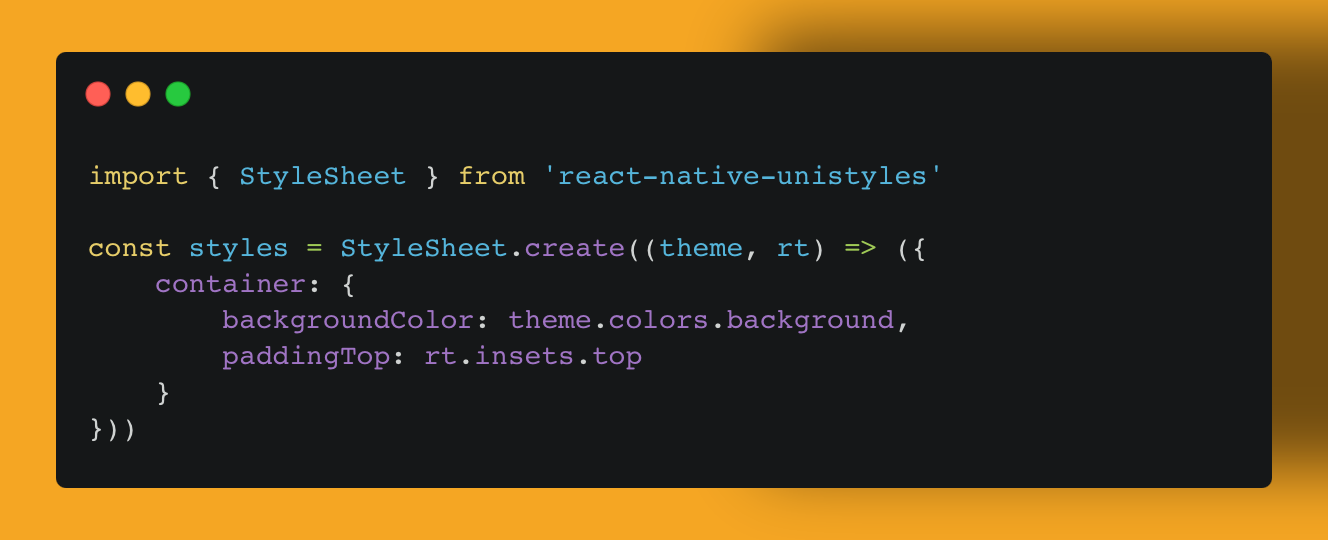

Unistyles 3.0 RC.1 just dropped at the React Native Connection Conference, and it’s already stirring things up like the first sip of coffee on a Monday morning. The library is officially in release candidate status, and it’s packing a punch. The big win here?

It’s tackling dynamic styles in the Shadow Tree using C++. What does that mean for you? Well, no more unnecessary re-renders when things like the theme, signal, or screen size change—just like CSS on the web.

What’s really cool about this version is that it’s taken the CSS-like styling you love and mixed in C++ to make things work seamlessly in the background. This means you don’t need React to step in every time something changes. That’s a major boost in efficiency and smoothness. If you’ve ever had to battle with React’s re-renders (and who hasn’t), this should feel like a relief.
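Here’s a deliberately tiny TypeScript model of that idea, assuming nothing about Unistyles’ internals: each style records which inputs (theme, screen width) it depends on, and when one input changes, only the affected nodes get their props patched in place, with no component render function involved. Every name here (bind, setInput, StyleBinding, NativeNode) is hypothetical.

```typescript
// Toy model, not Unistyles code: styles record the inputs they depend on,
// and changing an input patches only the affected nodes in place. No
// component render function is ever called.

type Inputs = { theme: "light" | "dark"; screenWidth: number };
type InputKey = keyof Inputs;
type Style = Record<string, string | number>;
type NativeNode = { props: Style }; // stands in for a shadow-tree node

interface StyleBinding {
  deps: InputKey[];
  compute: (inputs: Inputs) => Style;
  node: NativeNode;
}

const inputs: Inputs = { theme: "light", screenWidth: 390 };
const bindings: StyleBinding[] = [];
let patchCount = 0;

// Attach a style to a node and remember which inputs it reads.
function bind(deps: InputKey[], compute: (inputs: Inputs) => Style): NativeNode {
  const node: NativeNode = { props: compute(inputs) };
  bindings.push({ deps, compute, node });
  return node;
}

// Update one input, then recompute only the styles that depend on it.
function setInput<K extends InputKey>(key: K, value: Inputs[K]): void {
  inputs[key] = value;
  for (const binding of bindings) {
    if (binding.deps.includes(key)) {
      binding.node.props = binding.compute(inputs); // patch the node directly
      patchCount++;
    }
  }
}

const card = bind(["theme"], (i) => ({
  backgroundColor: i.theme === "dark" ? "#000" : "#fff",
}));
const grid = bind(["screenWidth"], (i) => ({ columns: i.screenWidth > 600 ? 3 : 1 }));

setInput("theme", "dark"); // patches only the card node
setInput("screenWidth", 800); // patches only the grid node
console.log(card.props.backgroundColor, grid.props.columns, patchCount); // #000 3 2
```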

And let’s not forget, Unistyles 3.0 RC.1 is going all out with Skia Canvas support (a browser-less implementation of the HTML Canvas drawing API), which is a high-five to WebGL (the Web Graphics Library, a JavaScript API for rendering high-performance interactive 3D and 2D graphics). Plus, its SSR improvements make server-side rendering smoother than ever.

Oh, and it plays nice with Xcode 16.3 and Windows—because no platform can be left behind these days.

This release is packed with a shit-ton of improvements. We’ve got everything from better theme handling on the web, numeric keys for variants, and updates to Reanimated and Gesture Handler auto-process paths. Sounds like Unistyles is growing up—becoming the stylish, more mature sibling of the React Native world. Thanks to @jpudysz for this awesome package.

“I am a” Legend List 1.0 is here, and no, it’s not promising to cure a mutant plague that’s wiped out most of humanity—except Will Smith and his dog. Unless that plague is slow, janky lists in React Native… in which case, yeah, this might be the cure.

Legend List by @jmeistrich is built to be a drop-in replacement for your traditional FlatList, and it brings a few interesting features to the table. He just dropped version 1.0, which I guess means it’s ready for production? Who knows.

One of its standout features is maintainVisibleContentPosition—a bit of black magic you’d never expect in a list library. This deceptively simple boolean keeps your lists from jumping around when items are added, removed, or updated—basically anything that changes the size of the list or its items—and prevents scroll position from getting messed up.

If you’ve ever dug into lists as much as I have, then you know maintaining scroll position reliably when the list changes is a royal pain in the ass.
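The core trick behind a steady scroll position is simple arithmetic: pick an anchor item that’s on screen, and after the data changes, shift the scroll offset by however far that item moved. Here’s a simplified sketch, assuming a vertical list with known item heights. topOf and adjustedScrollY are hypothetical helpers, not Legend List’s implementation.

```typescript
// Simplified scroll anchoring, the idea behind maintainVisibleContentPosition.
// Hypothetical helpers; assumes a vertical list with known item heights.

type Item = { id: string; height: number };

// Distance from the top of the content to the top of the item with this id.
function topOf(items: Item[], id: string): number {
  const index = items.findIndex((item) => item.id === id);
  if (index < 0) throw new Error(`anchor ${id} not found`);
  return items.slice(0, index).reduce((sum, item) => sum + item.height, 0);
}

// Keep the anchor item at the same on-screen position after the data changes.
function adjustedScrollY(before: Item[], after: Item[], scrollY: number, anchorId: string): number {
  return scrollY + (topOf(after, anchorId) - topOf(before, anchorId));
}

const before: Item[] = ["a", "b", "c", "d"].map((id) => ({ id, height: 100 }));
// Two 50px items arrive at the top (say, older chat messages).
const after: Item[] = [{ id: "x", height: 50 }, { id: "y", height: 50 }, ...before];

// Item "b" moved down by 100px, so the scroll offset follows it.
console.log(adjustedScrollY(before, after, 150, "b")); // 250
```

Without that adjustment, the content the user was reading would jump 100px down the screen.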

Legend List also claims to support items of different sizes “natively” (whatever that actually means), without noticeable performance hits. That said, you’ll still need to provide a getEstimatedItemSize—their docs, not ours.
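Why does an estimate matter? Before an item has been measured, the list still needs to know where to put everything after it. Here’s a hypothetical sketch of that bookkeeping: measured sizes win, and an estimate fills in the rest. The estimateSize callback is illustrative and isn’t meant to match Legend List’s exact signature.

```typescript
// Hypothetical sketch: item offsets from measured sizes where known,
// and from an estimate everywhere else.

type Row = { kind: "header" | "message" };

function layoutOffsets(
  rows: Row[],
  measured: Map<number, number>, // index -> measured height
  estimateSize: (row: Row, index: number) => number,
): number[] {
  const offsets: number[] = [];
  let y = 0;
  rows.forEach((row, index) => {
    offsets.push(y);
    y += measured.get(index) ?? estimateSize(row, index);
  });
  return offsets;
}

const rows: Row[] = [{ kind: "header" }, { kind: "message" }, { kind: "message" }];
const estimate = (row: Row): number => (row.kind === "header" ? 40 : 80);

// Nothing measured yet: offsets are pure guesses.
console.log(layoutOffsets(rows, new Map(), estimate)); // → [0, 40, 120]
// Row 1 turns out to be 120px tall, so everything after it shifts.
console.log(layoutOffsets(rows, new Map([[1, 120]]), estimate)); // → [0, 40, 160]
```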

Anyway, they’re claiming Legend List is the fastest list out there. A few extra features may not sound like a huge deal, but as a drop-in replacement, it’s honestly not a bad return on investment. In the side-by-side comparison of FlatList, FlashList 1.0 and Legend List, Legend List renders the content much faster and with less choppy behaviour.

Meanwhile, FlashList 2.0 has been completely rebuilt as a JS-only solution. The rewrite leaves old complexities behind, especially around layout management and item size estimates. However, while it promises improved performance, it’s still in alpha, so expect some bugs and rough edges.

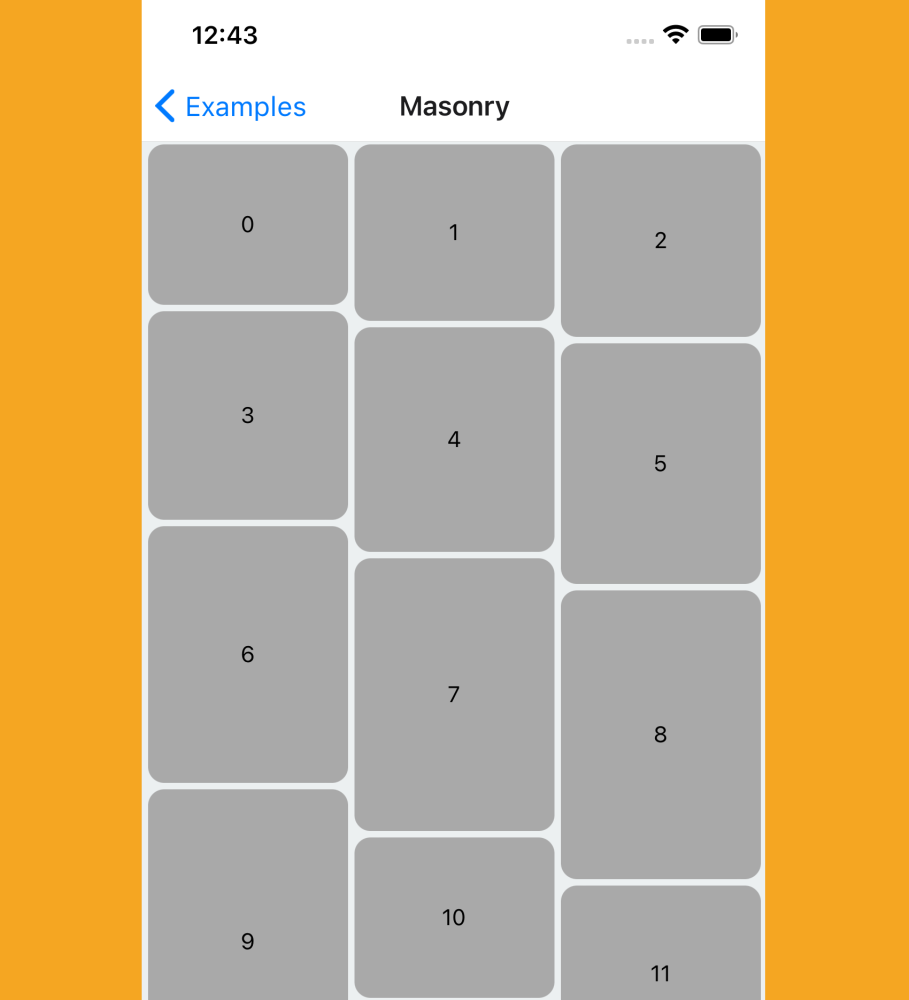

One of the standout new features in FlashList v2 is the addition of masonry as a prop. Now, you can work with masonry layouts (a grid of items with different heights) without needing to rely on custom hacks or additional configuration.
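Masonry layouts are typically built by dropping each item into whichever column is currently shortest. Here’s a sketch of that general technique (not FlashList’s code), given item heights and a column count; masonry and Placement are hypothetical names.

```typescript
// Shortest-column masonry placement: a sketch of the general technique.

type Placement = { column: number; y: number };

function masonry(heights: number[], numColumns: number): Placement[] {
  const columnHeights: number[] = new Array<number>(numColumns).fill(0);
  return heights.map((height) => {
    // Shortest column wins; indexOf picks the leftmost one on ties.
    const column = columnHeights.indexOf(Math.min(...columnHeights));
    const y = columnHeights[column];
    columnHeights[column] += height;
    return { column, y };
  });
}

console.log(masonry([200, 100, 150, 120], 2));
// → [{ column: 0, y: 0 }, { column: 1, y: 0 }, { column: 1, y: 100 }, { column: 0, y: 200 }]
```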

FlashList also introduces maintainVisibleContentPosition, a feature that’s now enabled by default. This keeps your list from jumping around when items are added or removed, providing smoother scrolling experiences, especially for real-time apps like chat interfaces where stability is key.

Another new feature in v2 is the onStartReached callback, with a configurable threshold, allowing for more control over the list’s behavior when you hit the start of the list—perfect for triggering actions or loading data. And yes, RTL (right-to-left) layout support is now included, expanding FlashList’s global accessibility.
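A start-reached callback with a threshold usually boils down to a distance check plus a guard so it doesn’t fire on every scroll event. This sketch assumes the threshold is measured in viewport lengths, the convention FlatList uses for onEndReachedThreshold; createStartReachedTracker is a hypothetical helper, not FlashList’s API.

```typescript
// Hypothetical start-reached tracker. Assumes the threshold is expressed
// in viewport lengths (0.5 = within half a screen of the top).

function createStartReachedTracker(threshold: number, onStartReached: () => void) {
  let armed = true; // fire once per approach to the top, not on every scroll event
  return (scrollY: number, viewportHeight: number): void => {
    const nearStart = scrollY <= threshold * viewportHeight;
    if (nearStart && armed) {
      armed = false;
      onStartReached();
    } else if (!nearStart) {
      armed = true; // re-arm once the user scrolls away from the start
    }
  };
}

let loads = 0;
const onScroll = createStartReachedTracker(0.5, () => loads++);
onScroll(1000, 800); // far from the top: nothing happens
onScroll(350, 800); // within 0.5 * 800 = 400px: fires
onScroll(100, 800); // still near the top: doesn't fire again
onScroll(900, 800); // scrolled away: re-armed
onScroll(200, 800); // near the top again: fires
console.log(loads); // 2
```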

Now, FlashList is also packing web support. It isn’t fully tested yet, but most features should work on the web too. If it holds up, that makes FlashList a truly cross-platform list solution, from mobile to desktop.

Shopify’s team clearly felt the heat from Jay Meistrich’s frantic race to cure slow lists with Legend List, and they’ve responded in full force with FlashList v2. With more features, less configuration, and a cleaner approach to handling dynamic lists, it’s a strong contender. But the war of the lists? It’s not over. Not yet.

Okay, I know I’ve gone on about Radon (previously React Native IDE) sounding like the third Cramp Twin (from The Cramp Twins, that TV show from the 2000s) but we’re officially in the middle of season one—wait, scratch that. It’s Radon 1.5. If you thought Radon IDE couldn’t get any better, think again—this update is like that pivotal mid-season episode where everything starts to make sense, and you’re left wondering why you didn’t see this coming.

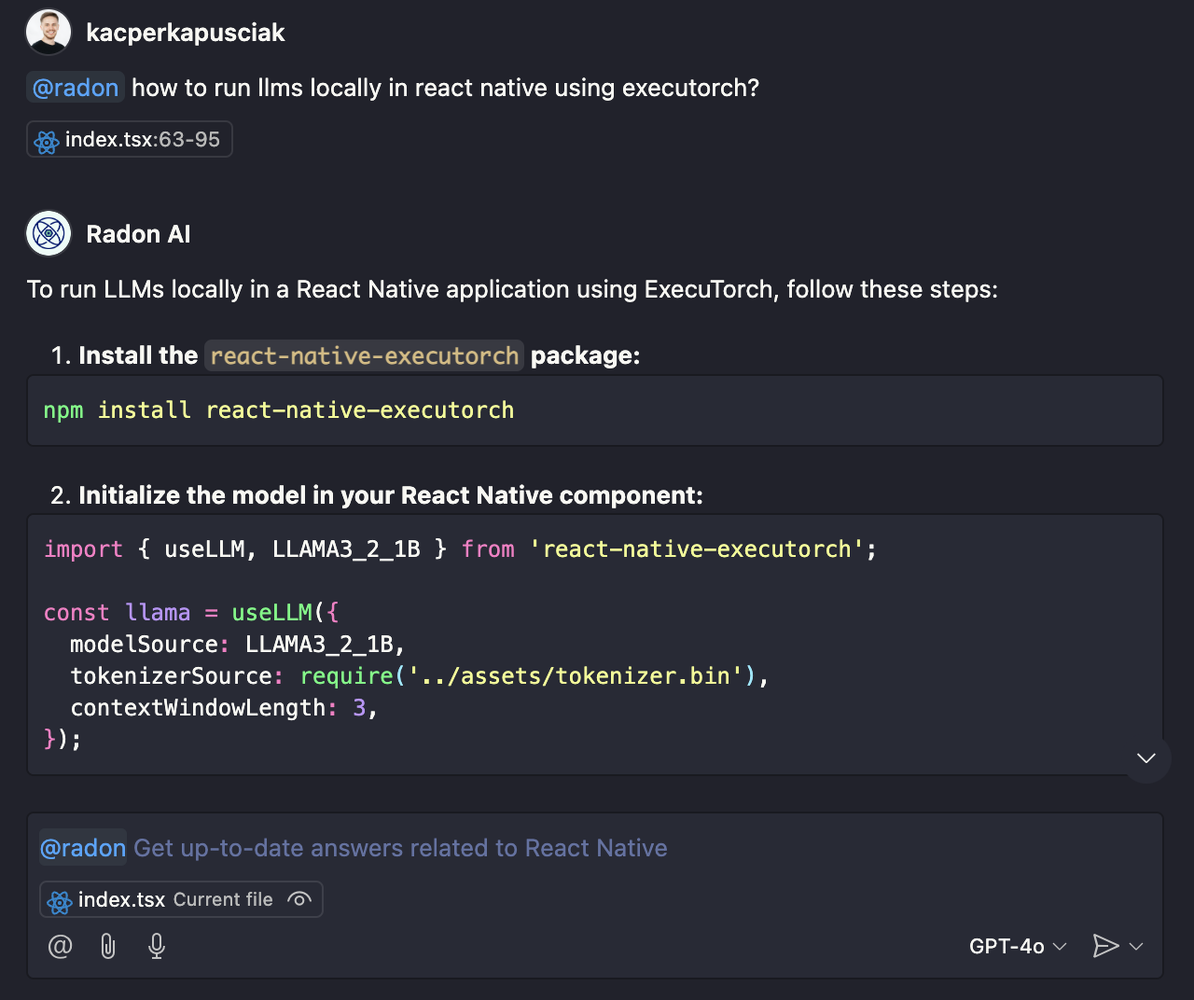

First things first, Radon 1.5 now supports React Native 0.79, but hold onto your seat—Radon’s new trick is Radon AI Chat. Yep, you heard it right. Now integrated with Radon, this AI-powered assistant makes debugging feel like you’re being guided by a hyper-intelligent sidekick. This tool pulls up-to-date React Native answers right inside VSCode.

And, like any good mid-season plot twist, EAS Build integration gets a much-needed upgrade too. Now, with Radon 1.5, you can fetch builds from EAS that match the fingerprint of your current project. So when you’re speeding through your dev workflow, Radon ensures it doesn’t go all “screw you, I’m gonna build everything from scratch.” Instead, it’s like, “Hey, here’s the exact build you need, ready to go.”

Radon 1.5 is officially the next chapter in the Cramp Twins saga, and I’m pretty sure this third twin has some serious staying power. From EAS workflow improvements to Radon AI turning your IDE into an assistant straight out of a sci-fi movie, this update is packing heat. Season one might be winding down, but Radon is just getting started. Thanks to @swmansion.

React Native AI 0.1 is here, and it’s bringing the future of large language models (LLMs) right to your phone. Powered by Vercel’s AI SDK, this project lets you run LLMs directly on your device, opening up a world of possibilities for offline AI-powered features in your apps.

Gone are the days of calling up cloud services every time you need some smart text generation. With React Native AI 0.1, the magic happens on-device using MLC’s LLM Engine. That means no network round trips (so less latency) and, most importantly, your users’ data stays off the cloud.

Under the hood, this uses the Vercel AI SDK to provide a unified API that works seamlessly with React Native. It doesn’t just throw models onto your device; it manages the whole process, including downloading, preparing, and running LLMs.

The models themselves? They’re local, which is great for performance and privacy. You can download and prepare your models on the fly, and the whole system is optimised for both iOS and Android. You won’t need to constantly rely on network requests, meaning better performance and a much smoother user experience.

But, as with any cutting-edge tech, there are some caveats. While the system is designed to be efficient, running LLMs locally means the device’s memory and processing power can become a limitation. You’ll need a bit of horsepower for things to run smoothly, so keep that in mind when choosing a model for your app.
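A rough way to reason about “enough horsepower” is weight memory: parameter count × bits per weight ÷ 8. It ignores the KV cache, activations and runtime overhead, so treat it as a lower bound. The model names and memory budget below are made up for illustration; nothing here is part of React Native AI.

```typescript
// Back-of-the-envelope model sizing. Weight memory only: the KV cache,
// activations and runtime overhead come on top, so this is a lower bound.
// The candidate models and the budget are hypothetical.

function weightBytes(parameters: number, bitsPerWeight: number): number {
  return (parameters * bitsPerWeight) / 8;
}

type Candidate = { name: string; parameters: number; bitsPerWeight: number };

// Pick the largest model whose weights fit in the given budget.
function largestThatFits(candidates: Candidate[], budgetBytes: number): Candidate | undefined {
  return candidates
    .filter((c) => weightBytes(c.parameters, c.bitsPerWeight) <= budgetBytes)
    .sort((a, b) => b.parameters - a.parameters)[0];
}

const GB = 1e9;
const candidates: Candidate[] = [
  { name: "tiny-1b-q4", parameters: 1e9, bitsPerWeight: 4 }, // about 0.5 GB
  { name: "small-3b-q4", parameters: 3e9, bitsPerWeight: 4 }, // about 1.5 GB
  { name: "medium-8b-q4", parameters: 8e9, bitsPerWeight: 4 }, // about 4 GB
];

console.log(largestThatFits(candidates, 2 * GB)?.name); // small-3b-q4
```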

If you’ve been dreaming of a world where your apps can process data locally, without external servers getting involved, this is a solid step toward that vision. It might not replace every cloud-based API just yet, but it’s a huge leap in the right direction.

Thanks to @callstackio for the steady flow of AI tools.

Managing multi-step flows in React Native just got smoother with Bottom Sheet Stepper. Built on top of @gorhom/bottom-sheet, this component allows you to create step-based navigation within a modal bottom sheet, making it ideal for onboarding processes, wizards, or custom forms.

Developed by @mehdi_made, the library offers a lightweight, customizable solution for handling stepper functionality. It provides built-in support for navigating between steps using onNextPress, onBackPress, and an optional onEnd callback, so users get a seamless experience as they progress through each step.

The component leverages react-native-reanimated for smooth animations and offers full control over the layout and styling. You can customise the appearance to match your app’s design requirements, ensuring consistency across your application.
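Under the hood, a stepper is a small state machine. Here’s a hypothetical sketch of that logic: StepperState, next and back are illustrative names, not Bottom Sheet Stepper’s API, and we’re assuming onEnd fires when the user presses “next” on the last step.

```typescript
// Hypothetical stepper state machine; not Bottom Sheet Stepper's API.

type StepperState = { index: number; total: number; finished: boolean };

function next(state: StepperState, onEnd?: () => void): StepperState {
  if (state.index < state.total - 1) return { ...state, index: state.index + 1 };
  onEnd?.(); // pressing "next" on the last step completes the flow
  return { ...state, finished: true };
}

function back(state: StepperState): StepperState {
  return { ...state, index: Math.max(0, state.index - 1) }; // can't go below step 0
}

let ended = false;
let s: StepperState = { index: 0, total: 3, finished: false };
s = next(s); // step 1
s = back(s); // step 0
s = back(s); // stays at step 0
s = next(next(s)); // step 2 (the last one)
s = next(s, () => {
  ended = true;
});
console.log(s.index, s.finished, ended); // 2 true true
```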

👉 Explore Bottom Sheet Stepper on GitHub

Have you been looking to add some celebratory flair to your React Native app? The typegpu-confetti package uses the power of the GPU to render confetti animations, keeping performance smooth even during intense animations.

Built on top of react-native-wgpu and TypeGPU, typegpu-confetti lets you create customisable confetti effects without taxing the CPU. Offloading rendering to the GPU keeps your app responsive, even when it’s displaying many particles at once.

The library offers flexibility in its usage. You can integrate it using the Confetti component or the useConfetti hook, depending on your preference. The Confetti component allows you to specify the initial number of particles, while the useConfetti hook provides imperative control over the animation, enabling you to add particles dynamically as needed.

To enhance the animations, typegpu-confetti supports custom shaders written in WGSL (WebGPU Shading Language). This means you can define how the particles behave, their appearance, and their movement patterns.

It’s worth noting that to utilise the full capabilities of typegpu-confetti, you’ll need to set up react-native-wgpu and include the unplugin-typegpu in your project. These dependencies enable the GPU rendering and shader compilation, respectively.

👉 Explore typegpu-confetti on GitHub